Password Policy Attacking Costs, part 2: Practical Problems

- By Miloslav Homer

- Sun 10 March 2024

- Updated on Fri 14 June 2024

Ok. Since you've read the first part, you know what a password is and why you'd want to crack some hashes. Now you're interested in actually setting up the whole thing.

As mentioned in part 1, the source code is on GitHub.

Scraping

Whenever you work with data sourced from web pages, you should be thinking about scraping. Why should you do that? If the data source is updated, you just press enter and your experiment runs with fresh data.

What really helped me here was to separate the crawling logic from the parsing logic.

Use caches to avoid abusing the services; the Requests-Cache library is great for this. Without a cache, not only might you find yourself rate-limited, but you also always have to wait for the network.

If you lean towards OOP, I'd recommend having a separate scraper for each URL, as the pages are usually completely different. As you'll see shortly, I didn't listen to my own advice.
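To illustrate these three tips together, here's a minimal sketch (not the code from the repo): a base class whose crawl() does the cached network I/O through Requests-Cache and whose parse() is a pure function you can test offline, plus one subclass per source. The SkuGpuScraper class, its URL and the "Size"/"GPU" column names are hypothetical placeholders; real docs pages are laid out differently.

```python
from html.parser import HTMLParser
from typing import Any, List, Optional


class Scraper:
    """crawl() does the (cached) network I/O; parse() is pure and testable offline."""

    url = ""
    cache_name = "scraper_cache"

    def crawl(self) -> str:
        # Imported here so parse() works even where requests-cache isn't installed.
        import requests_cache

        # Responses go to a local cache (SQLite by default) and are reused for a day.
        session = requests_cache.CachedSession(self.cache_name, expire_after=24 * 3600)
        response = session.get(self.url, timeout=30)
        response.raise_for_status()
        return response.text

    def parse(self, raw: str) -> Any:
        raise NotImplementedError

    def scrape(self) -> Any:
        return self.parse(self.crawl())


class _TableRows(HTMLParser):
    """Collects the text of every <td>/<th> cell, row by row."""

    def __init__(self) -> None:
        super().__init__()
        self.rows: List[List[str]] = []
        self._row: Optional[List[str]] = None
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._in_cell = True
            self._row.append("")

    def handle_endtag(self, tag):
        if tag in ("td", "th"):
            self._in_cell = False
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._in_cell and self._row is not None:
            self._row[-1] += data.strip()


class SkuGpuScraper(Scraper):
    """Hypothetical page with a table that has "Size" and "GPU" columns."""

    url = "https://example.com/gpu-sizes"  # placeholder, not the real docs URL

    def parse(self, raw: str) -> dict:
        table = _TableRows()
        table.feed(raw)
        table.close()
        if not table.rows:
            return {}
        header, *body = table.rows
        size_i, gpu_i = header.index("Size"), header.index("GPU")
        return {row[size_i]: row[gpu_i] for row in body if len(row) > max(size_i, gpu_i)}
```

SkuGpuScraper().scrape() hits the network only on the first run (or after the cache expires), while parse() can be unit-tested against a saved copy of the page.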

Benchmarks

Once you find a good source of hashcat benchmarks, you need to parse them. The output is made for human readers, but it's reasonably parsable; I used a standard finite automaton for this (see here).

The gotchas were:

- unit conversion: a simple lookup table fixes it,

- binding devices to speeds: you need to keep track of the device numbers,

- inconsistent speed labels: Speed.#1 vs Speed.Dev.#1, so check for both.

Overall, this went as smoothly as expected.
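For illustration, here's a minimal sketch of such a two-state automaton handling the three gotchas above. It is not the parser from the repo, and the line shapes ("* Device #1: ...", "Hashmode: 0 - MD5", "Speed.#1.........: 164.1 GH/s ...") are my assumption of what hashcat benchmark output looks like; check them against your own source.

```python
import re
from dataclasses import dataclass

# The "simple table fix" for units: hashcat suffix -> hashes per second.
UNITS = {"H/s": 1.0, "kH/s": 1e3, "MH/s": 1e6, "GH/s": 1e9, "TH/s": 1e12}

DEVICE_RE = re.compile(r"^\* Device #(\d+): ([^,]+)")
MODE_RE = re.compile(r"^Hashmode: (\d+) - (.+)$")
# Accept both label variants: "Speed.#1" and "Speed.Dev.#1".
SPEED_RE = re.compile(r"^Speed\.(?:Dev\.)?#(\d+)\.*:\s*([\d.]+) ([kMGT]?H/s)")


@dataclass
class Benchmark:
    mode: int
    hash_name: str
    device: str
    hashes_per_second: float


def parse_benchmark(text: str) -> list:
    devices = {}        # device number -> device name
    state = "devices"   # "devices" until the first Hashmode line, then "modes"
    current = None      # (mode number, hash name) currently being read
    results = []
    for line in (raw.strip() for raw in text.splitlines()):
        if state == "devices":
            device = DEVICE_RE.match(line)
            if device:
                devices[device.group(1)] = device.group(2).strip()
                continue
            if not MODE_RE.match(line):
                continue
            state = "modes"  # first Hashmode line: fall through and handle it below
        mode = MODE_RE.match(line)
        speed = SPEED_RE.match(line)
        if mode:
            current = (int(mode.group(1)), mode.group(2).strip())
        elif speed and current is not None:
            number, value, unit = speed.groups()
            results.append(Benchmark(
                mode=current[0],
                hash_name=current[1],
                device=devices.get(number, f"Device #{number}"),  # bind speed to device
                hashes_per_second=float(value) * UNITS[unit],
            ))
    return results
```

parse_benchmark(text) returns one Benchmark per (mode, device) pair with the speed already converted to H/s, which loads straight into a pandas DataFrame.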

Azure Scraping

I need two data sources: the mapping of SKUs (the machine types) to GPUs is in the official docs, whereas the pricing details for the SKUs are available through the API.

Whenever possible, use the API. It's so much simpler. Honestly, that was my main reason for going with Azure: after wrestling with the AWS and GCP pricing calculators, I couldn't bring myself to finish the job.
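A minimal sketch of querying prices, assuming the public Azure Retail Prices REST endpoint (prices.azure.com/api/retail/prices), which needs no authentication and takes an OData $filter. The region and SKU are just example values, and dropping Windows and non-Consumption meters is my choice, not necessarily what the repo does.

```python
import json
import urllib.parse
import urllib.request
from typing import Iterator

PRICES_API = "https://prices.azure.com/api/retail/prices"


def prices_url(region: str, arm_sku: str) -> str:
    # OData filter; quote_via=quote encodes spaces as %20 rather than "+".
    query = (
        "serviceName eq 'Virtual Machines' "
        f"and armRegionName eq '{region}' and armSkuName eq '{arm_sku}'"
    )
    return PRICES_API + "?" + urllib.parse.urlencode(
        {"$filter": query}, quote_via=urllib.parse.quote
    )


def fetch_pages(url: str) -> Iterator[dict]:
    # Results are paged; follow NextPageLink until it is empty.
    while url:
        with urllib.request.urlopen(url, timeout=30) as resp:
            page = json.load(resp)
        yield page
        url = page.get("NextPageLink")


def linux_hourly_prices(page: dict) -> dict:
    """skuName -> retailPrice for pay-as-you-go Linux meters on one page."""
    prices = {}
    for item in page.get("Items", []):
        if item.get("type") != "Consumption":
            continue  # skip DevTest and reservation prices
        if "Windows" in item.get("productName", ""):
            continue  # Windows meters include the licence
        prices[item["skuName"]] = item["retailPrice"]
    return prices
```

Usage: for page in fetch_pages(prices_url("westeurope", "Standard_NC6s_v3")): print(linux_hourly_prices(page)). Spot and low-priority meters should show up as separate skuName entries (e.g. with a " Spot" suffix), so you can compare them side by side.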

Tool CLI

I've used my favourite CLI template based on Click.

The glue code is pretty straightforward, utilizing pandas for data manipulation. I found it useful to prepare an ancillary file with hashcat modes, so I have an easier time mapping benchmarks to hash names. You can't generate that file from the tool, but it's included in the repo.
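As a rough illustration of that glue, here's a sketch that joins parsed benchmarks with a mode-to-name table and a SKU-to-GPU price table, then estimates how long and how much it would take to exhaust a keyspace. All column names, speeds and prices are made-up placeholders (only hashcat modes 0 = MD5 and 1400 = SHA2-256, and the V100 in the NC6s_v3, are real); the repo's actual tables differ.

```python
import pandas as pd


def cracking_costs(benchmarks: pd.DataFrame, modes: pd.DataFrame,
                   skus: pd.DataFrame, keyspace: float) -> pd.DataFrame:
    """Hours and dollars needed to exhaust `keyspace` for every (SKU, hash) pair."""
    df = (
        benchmarks.merge(modes, on="mode", how="left")  # attach readable hash names
        .merge(skus, on="gpu", how="inner")             # keep only GPUs we can rent
    )
    df["hours"] = keyspace / df["speed"] / 3600         # speed is in hashes per second
    df["cost"] = df["hours"] * df["price_per_hour"]
    return df[["sku", "hash_name", "hours", "cost"]]


# Made-up placeholder numbers, for illustration only.
modes = pd.DataFrame({"mode": [0, 1400], "hash_name": ["MD5", "SHA2-256"]})
benchmarks = pd.DataFrame({"gpu": ["V100", "V100"], "mode": [0, 1400], "speed": [50e9, 7e9]})
skus = pd.DataFrame({"sku": ["Standard_NC6s_v3"], "gpu": ["V100"], "price_per_hour": [3.0]})

if __name__ == "__main__":
    # e.g. all 8-character passwords over 95 printable ASCII characters
    print(cracking_costs(benchmarks, modes, skus, keyspace=95**8))
```

The left merge keeps benchmarks even for modes missing from the names file (hash_name becomes NaN), while the inner merge drops GPUs you can't rent anyway.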

Another, slightly more interesting part is the experiment preparation, where I generate the hashes to be cracked along with their answers. Most hashes behaved well, but it took a couple of tricks to make Scrypt work: I picked the parameters hashcat expects and then converted the result into the format hashcat expects. It's a mess, but it works. You have been warned: I take no liability for any eye damage you suffer if you take a look here. Place the hashes into separate files and prepare a log with the answers in the same format hashcat produces.
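For the curious, here's a sketch of the generation side using only the standard library. It's not the repo's code: it emits scrypt hashes in the SCRYPT:N:r:p:base64(salt):base64(hash) layout of hashcat mode 8900 (the same shape as the SCRYPT line in the results further down), assuming N=1024, r=1, p=1, a 16-byte salt and a 32-byte derived key to match that line. The write_experiment helper and its file naming are hypothetical.

```python
import base64
import hashlib
import os


def _b64(raw: bytes) -> str:
    return base64.b64encode(raw).decode("ascii")


def hashcat_scrypt(password: str, salt: bytes, n: int = 1024, r: int = 1, p: int = 1) -> str:
    """Scrypt hash in the SCRYPT:N:r:p:salt:hash layout (hashcat mode 8900)."""
    digest = hashlib.scrypt(password.encode(), salt=salt, n=n, r=r, p=p, dklen=32)
    return f"SCRYPT:{n}:{r}:{p}:{_b64(salt)}:{_b64(digest)}"


def plain_hash(algorithm: str, password: str) -> str:
    """Unsalted hex digest, e.g. for "md5", "sha1", "sha256" or "sha512"."""
    return hashlib.new(algorithm, password.encode()).hexdigest()


def answer_line(hash_str: str, password: str) -> str:
    # Same hash:plain shape as the lines hashcat writes for cracked hashes.
    return f"{hash_str}:{password}"


def write_experiment(entries: dict, directory: str) -> None:
    """entries maps a file stem to (hash, password); one hash per file plus answers.log."""
    os.makedirs(directory, exist_ok=True)
    with open(os.path.join(directory, "answers.log"), "w") as answers:
        for stem, (hash_str, password) in entries.items():
            with open(os.path.join(directory, f"{stem}.hash"), "w") as f:
                f.write(hash_str + "\n")
            answers.write(answer_line(hash_str, password) + "\n")
```

Usage: write_experiment({"scrypt": (hashcat_scrypt("FO<wa", os.urandom(16)), "FO<wa")}, "experiment").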

To verify the experiment, you still need to compare the two logs in an order-independent way, as hashcat gives few guarantees about the order of its output. Something like this does the job; every line should then be counted exactly twice:

$ cat answers.log cracked.log | sort | uniq -c
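The same order-independent check can be sketched in Python with sets instead of sort | uniq -c. It assumes both files hold one hash:plain line per hash; two empty sets mean every answer was cracked and nothing unexpected showed up.

```python
from typing import Iterable, Set, Tuple


def compare(answers: Iterable[str], cracked: Iterable[str]) -> Tuple[Set[str], Set[str]]:
    """Return (missing, unexpected) lines, ignoring order and blank lines."""
    expected = {line.rstrip("\n") for line in answers if line.strip()}
    found = {line.rstrip("\n") for line in cracked if line.strip()}
    return expected - found, found - expected
```

Usage: with open("answers.log") as a, open("cracked.log") as c: missing, unexpected = compare(a, c). Unlike the uniq -c output, this points you directly at the offending lines.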

The Experiment

Now we are in the business of GPU VM deployments in Azure. And you can't just deploy a GPU-enabled VM in Azure.

Not all GPUs are in all zones, and not all GPUs are available to rent with Azure credits. We can't rent spot instances with credits either. And the GPUs I have benchmarks for aren't on the list of available ones.

So the new plan is to take whatever GPUs we can get and then recalculate the experiment table. I still think the argument holds up well, but you'd need a fully-fledged Azure account. We can still compare the method against the experiment; the ordeal will just be more expensive.

The script

Cue "In the Hall of the Mountain King".

Manual edits in the console won't cut it for such time-sensitive deployments, so I started thinking about automation right away.

I considered Terraform, but scratched the idea because the flow I wanted was very procedural. Of course, it can be done in TF, but I thought a plain script would be cleaner. That was my first mistake.

Anyway, here's the plan:

- provision an Azure VM of the correct family,

- install the required tools,

- run each policy to crack one random hash from it and display the cracked results for verification,

- TEAR IT DOWN ASAP (these are expensive when left running).
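The plan above maps naturally onto a procedural script. Here's a minimal Python sketch of the control flow (the repo uses bash and Bicep instead); the key point is the try/finally, which deletes the resource group even when a step fails. The VM name, the Ubuntu2204 image alias and the remote steps are illustrative assumptions, and the commands go through an injectable run function so the flow can be dry-run without Azure.

```python
import subprocess
from typing import Callable, Sequence

# A runner executes one command; injectable so the flow can be dry-run.
Runner = Callable[[Sequence[str]], object]


def az_runner(cmd: Sequence[str]) -> object:
    # check=True raises CalledProcessError on a non-zero exit code.
    return subprocess.run(list(cmd), check=True)


def run_experiment(group: str, location: str, size: str,
                   remote_steps: Sequence[Sequence[str]],
                   run: Runner = az_runner) -> None:
    """Provision a VM, run the experiment steps, and ALWAYS tear everything down."""
    run(["az", "group", "create", "--name", group, "--location", location])
    try:
        run(["az", "vm", "create",
             "--resource-group", group,
             "--name", "cracker",        # hypothetical VM name
             "--image", "Ubuntu2204",    # image alias; check what your az version accepts
             "--size", size,
             "--admin-username", "azureuser",
             "--generate-ssh-keys"])
        for step in remote_steps:       # e.g. install tools, run hashcat, print results
            run(step)
    finally:
        # Deleting the resource group removes the VM, its disk, and the networking.
        run(["az", "group", "delete", "--name", group, "--yes", "--no-wait"])
```

Passing run=print prints the whole command sequence without touching Azure, which is a cheap way to debug the flow before spending any money.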

I also prepared a halved experiment so that I could test the process on a weaker machine. Debugging takes time, time is money, and that's money I don't want to spend.

More technical busywork is incoming 🥳🙌🎉🤩. Reminder: this is still simpler and more fun than digging through AWS pricing and GCP pricing.

Azure deploying

Of course, there are more complications.

Debian ships az in version 2.18.0, which has this nasty bug.

az upgrade correctly determines that we could go to 2.29.0, but internally it uses apt, which decides that 2.18.0 is good enough for my machine.

Oh well. Create a new virtual Python environment and grab it from pypi.org.

You can't just create a VM; you need a networking setup for it. You can find the script that creates it in the repo. Fortunately, the Azure docs cover this well.

Azure experts can laugh at my silly mistakes such as debian-11 != Debian11 and Standard_B1s != B1s.

As you already know, not every GPU is available to you. But there's more:

- Even if the region has the GPU machines, they might not be available at the moment (or for a longer while),

- Even if the region has the GPU machines available, they might not be available to you,

- CUDA isn't supported on every GPU there is,

- You can't just use a normal deployment; you need to use the Azure Resource Manager model, which comes with its own template language called Bicep!

At this point, I realized how much I love Terraform. I still had to wrap the deployment in a script for automated machine teardown, creating an unholy bash abomination, but it is what it is. There's probably a better way of doing this.

Find the source code in the repo.

Shoutout to Armin Reiter for guidelines.

I am convinced that this is still simpler and more fun than navigating AWS pricing and GCP pricing.

Running with a GPU

Of course, I researched far and wide to prepare for the costly GPU deployment. I noticed most tutorials assume an x86 architecture and Nvidia GPUs, so I searched for SKUs matching this combo and chose the NC6s_v3. And that's on top of all the automation above, built to make the process as smooth as possible.

Yes, I said I wanted to go with spot prices, but I was in a pretty sticky situation. Not only would I need to figure out how to handle the interruption notifications, I also had $200 of credit to burn within 30 days. I wasn't confident I'd get it working in that timeframe alongside my usual obligations.

In this AI age, you need a quota increase to get such a machine. That won't happen automatically; you need to raise a support ticket with your request. You request vCPUs, not machines: since this machine has 6 vCPUs, you're requesting a quota raise from 0 to 6 on the NC6s v3 cores, which is slightly confusing. Support was quick and helpful, and I got the green light to deploy.

With my scripts ready, I took a sip of coffee and pressed enter.

Of course it didn't work at all.

The drivers were FUBAR, so I decided to switch from Debian to Ubuntu, as there are more tutorials for it. After some debugging, I arrived at a working combination and rewrote the scripts accordingly. Installing the whole toolkit isn't cheap either: it's about 3 GB and a lot of packages, which took a considerable amount of time, and time on this VM is costly.

I spent a couple of hours debugging, but now I have hashcat running on the GPU. The script is running, and now we wait.

Hey, at least I don't have to look at the AWS and GCP pricing pages to determine their configurations.

Computing

But wait. The script is running in an SSH session - it'd be killed if I log out. Or if the session hangs. Or the wifi wobbles a bit. Oh no.

So I dug out the nohup command.

Now it'd work in the background and I could terminate the SSH session.

The next step was to wait for some quick wins to finish, kill the big ones, and restart them under nohup.

After many hours, I realized that I couldn't interact with hashcat anymore: you can't just attach a terminal to a nohup'd process. Since hashcat runs heavily optimized GPU kernels, I didn't dare try to recover that process, fearing it would crash. The interactivity was gone; I couldn't see the current speed or the progress percentage. All I could see was the elapsed time and the GPU utilization, and I prayed it was working.
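In hindsight, two things might have helped. A sketch, assuming a mask attack (-a 3) with a hypothetical mask and file names: hashcat's --status and --status-timer options should make it print its status block (speed, progress) periodically even without an interactive terminal, so tail -f on the log shows what's going on, and --session names a checkpoint you can later resume with hashcat --session=NAME --restore. The launcher is a rough Python equivalent of nohup: a new session means the process doesn't get SIGHUP when the SSH connection drops, and all output goes to a log file.

```python
import subprocess
from typing import List


def hashcat_command(mode: int, hash_file: str, mask: str, session: str) -> List[str]:
    """Mask attack that reports its status every 60 s and can be restored later."""
    return [
        "hashcat", "-m", str(mode), "-a", "3", hash_file, mask,
        f"--session={session}",           # checkpoint name for a later --restore
        "--status", "--status-timer=60",  # periodic status block in the log
        "-o", f"{session}.cracked",       # cracked hash:plain lines
    ]


def launch_detached(cmd: List[str], log_path: str) -> subprocess.Popen:
    """Rough nohup equivalent: own session (no SIGHUP from the SSH terminal), output to a file."""
    with open(log_path, "ab") as log:
        # The child keeps its own copy of the file descriptor after we close ours.
        return subprocess.Popen(
            cmd,
            stdin=subprocess.DEVNULL,
            stdout=log,
            stderr=subprocess.STDOUT,
            start_new_session=True,
        )
```

Usage: launch_detached(hashcat_command(0, "md5.hash", "?a?a?a?a?a?a", "md5"), "md5.log"), then tail -f md5.log from any later SSH session. Tools like tmux or screen solve the same problem if you'd rather keep the interactive screen.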

As my budget started to dwindle, I was getting nervous.

Would I make it in time?

Should I throw in the towel?

Surely, this would finish any minute, right?

With these thoughts, I went to sleep. In the morning, it was still working hard. Another day passed and I went to sleep again with the same set of questions, but considerably more nervous.

Fortunately, it finished and all of the hashes were cracked:

$ cat answers.log cracked_answers.log | sort | uniq -c

2 1f78e1f2ee9d821def239036d1b70ccf:cldbhlzzjzc

2 2598b71373a0ebe0c06bd58170be8355a423fafa6dde91fe1a40343fcffaac8f:>oXSQ<2

2 3e4713caa156cd070ca6a44e82fc1c435089f07ce7264f057634208598de69470dcc69f926e2f1fbcbda8a47b48c7e90a5e508b0d7b2a9d2e55da3dc56496202:1Rys"un

2 5f09a1ed450bb6abd6d8f80efa60b0a3f11482d18344c916afb6551d8465ba97:deadbeef:*`P:_m`

2 82c67ef491352e628f44a3343c66353a:Iv>uuOo(

2 ab32566ce5341e53315cc1712549734dfcc43856:Gvg4f-.

2 SCRYPT:1024:1:1:qFWqVeodw7j3PgdgjDFmzA==:yFrIiWyd4Se12mT1Yf+JUAgoo0UNJq6fBF7nll/ltKI=:FO<wa

Invoicing

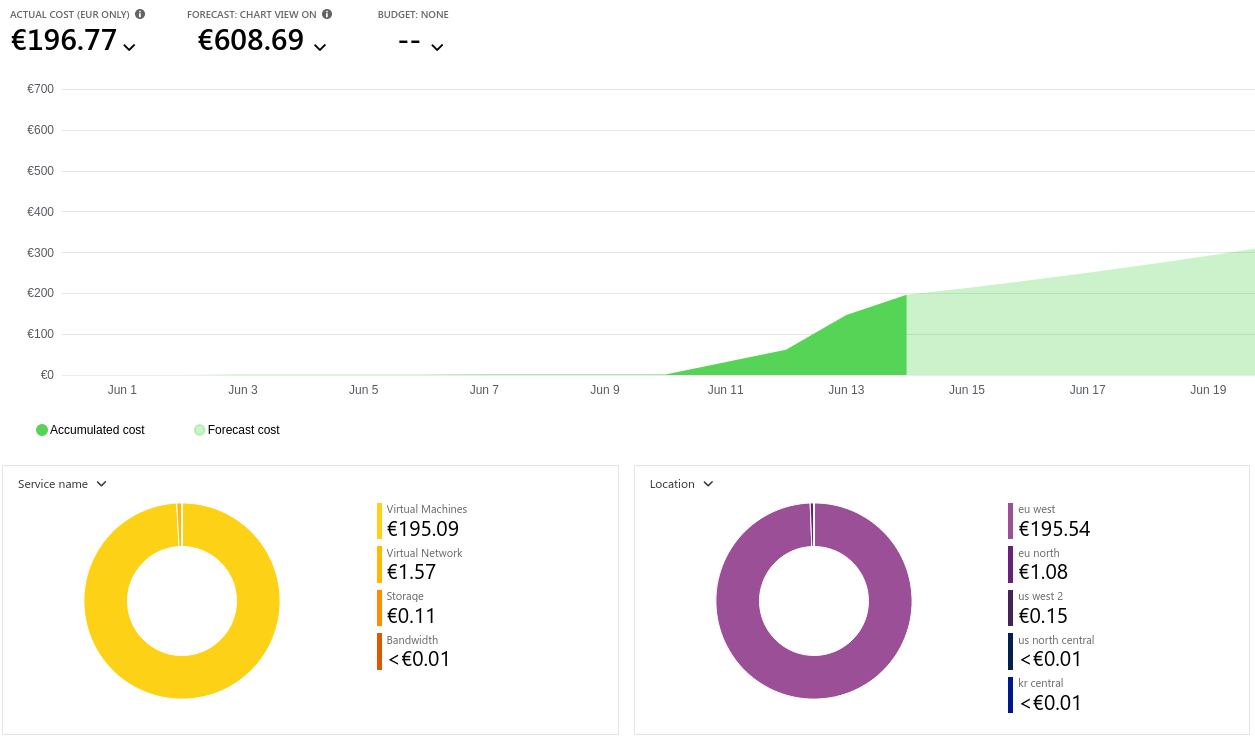

As you can see from the graph, it's impossible to get a real-time reading of the money spent. The forecast seems completely off to me: I know the cost is just one VM running at a constant rate plus some negligible network spending.
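Since the cost is essentially linear, a back-of-the-envelope estimate is easy to compute yourself. A sketch, assuming a constant hourly price you look up beforehand; the network term defaults to zero because it was negligible here.

```python
from datetime import datetime, timedelta


def spend_so_far(started: datetime, now: datetime, price_per_hour: float,
                 network_cost: float = 0.0) -> float:
    """Constant-rate VM cost since `started`, plus an optional flat network cost."""
    hours = (now - started).total_seconds() / 3600
    return hours * price_per_hour + network_cost


def runway(budget_left: float, price_per_hour: float) -> timedelta:
    """How long the remaining credit lasts at the current hourly rate."""
    return timedelta(hours=budget_left / price_per_hour)
```

Calling spend_so_far(start, datetime.now(), price) and runway(credit_left, price) answers the "Would I make it in time?" question without waiting for the billing graph to catch up.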

Conclusion

Now you're well prepared to deploy your own Azure cracking machine. Hopefully you found the insights here useful, or at least funny. Check out the code repository related to the article at https://github.com/ArcHound/password-policy-calculations. Have a nice day and thanks for reading.